Pix4D creates professional photogrammetry software for drone mapping. The people who use our products include surveyors, farmers, and researchers, and they use the software on a range of platforms including desktop, mobile, and cloud. Pix4D’s continuous integration (CI) team is based in the headquarters in Lausanne, Switzerland, where we design and maintain the systems used by our developers to automatically build, test, and package our suite of products.

Our CI system is designed around the paradigm of infrastructure as code. We manage the system’s resources using text-based, version-controlled configuration files and automation tools such as Terraform and Salt. All resources are located in a virtual private cloud (VPC) and hosted by Amazon Web Services (AWS). We use Concourse CI as our CI software because it is fully configurable by YAML files and scripts that can be shared, reviewed, and tracked in version control just like an application code base. In addition, each task that runs on a Linux platform is executed inside a Docker container, which provides the isolation necessary for reproducible builds.

Recently our developers began building some of our software products for macOS. This meant that we had to find a way to incorporate macOS builds into our CI environment with Concourse.

Integrating macOS builds into our existing CI

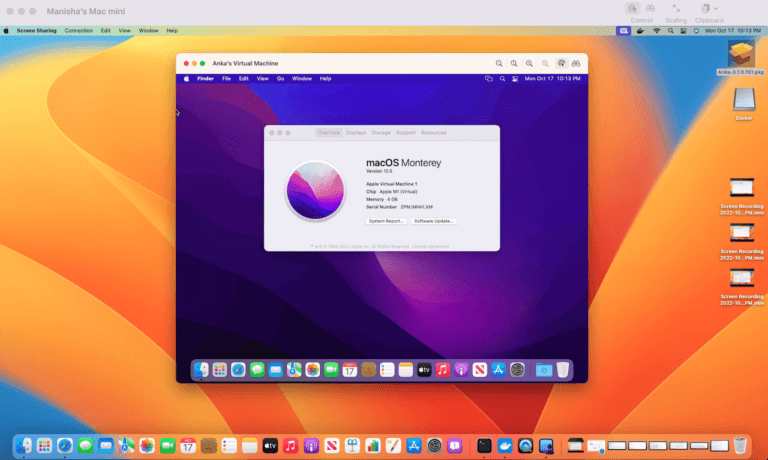

The first challenge we faced in incorporating macOS builds into our CI was finding the right infrastructure. The services provided by our current CI run as AWS Elastic Compute Cloud (EC2) instances. Unfortunately, macOS images are not available for EC2. This meant that we would need to either subscribe to a separate service or self-host our own machines. We ultimately decided that we would have the most flexibility by hosting a number of on-premises Mac minis and integrating them into our existing cloud.

The second (and more important) problem was determining how we could create an isolated build environment on the Mac. Concourse uses Docker containers for isolation on Linux. A number of Linux instances are registered with the Concourse task scheduler, and the scheduler sends them jobs to do in the form of Docker containers and volumes. On the Mac, however, Concourse executes builds directly on the host machine. This increases the chances of environmental drift and flakiness, ultimately reducing our ability to maintain the system and scale up our services. The solution to this problem was provided by Anka, a macOS virtualization technology for container DevOps by Veertu, Inc.

Anka and Concourse

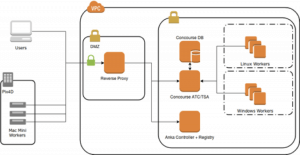

Infrastructure – The primary components of our CI system are displayed in the accompanying figure.

Our local cluster of Mac minis is connected to the VPC through a single entry point: the reverse proxy. The individual Macs register directly with the Anka controller and registry inside the VPC, both of which are hosted on a Linux EC2 instance. The Anka Build controller spawns and destroys VM instances on the Mac minis; requests to spawn or destroy an instance are made through the controller’s REST API.
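As a rough illustration of what a spawn or destroy request to the controller might look like, the following sketch builds the HTTP requests without sending them. The controller address is hypothetical, and the endpoint path (`/api/v1/vm`) and field names (`vmid`, `count`, `id`) are assumptions based on our reading of the Anka controller documentation; check them against the version you run.

```python
import json
import urllib.request

# Hypothetical address of the controller inside the VPC (not from the article).
CONTROLLER_URL = "http://anka-controller.example.internal"


def build_spawn_request(template_id: str, count: int = 1) -> urllib.request.Request:
    """Build (but do not send) a request asking the controller to start VMs.

    Assumption: VMs are started with POST /api/v1/vm and a JSON body that
    names the VM template ("vmid") and the number of instances ("count").
    """
    payload = {"vmid": template_id, "count": count}
    return urllib.request.Request(
        f"{CONTROLLER_URL}/api/v1/vm",
        data=json.dumps(payload).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_terminate_request(instance_id: str) -> urllib.request.Request:
    """Build (but do not send) a request asking the controller to destroy a VM.

    Assumption: instances are terminated with DELETE /api/v1/vm and a JSON
    body carrying the instance identifier ("id").
    """
    return urllib.request.Request(
        f"{CONTROLLER_URL}/api/v1/vm",
        data=json.dumps({"id": instance_id}).encode(),
        headers={"Content-Type": "application/json"},
        method="DELETE",
    )


if __name__ == "__main__":
    request = build_spawn_request("example-template-id")
    print(request.get_method(), request.full_url, request.data.decode())
```

Passing either request object to `urllib.request.urlopen` would actually send it; the sketch stops short of that so it can be read and run without a controller.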

The Anka Build Registry acts as a store of VM images. We pre-bake images with both the Concourse binary and the necessary tools and libraries to build our software, then push the images to the registry. If a Mac mini does not yet have an image saved locally, it will automatically download the image from the registry when a new VM is requested.

A startup script registers each newly created Anka VM instance as a worker with the ATC, which is the term that Concourse uses to refer to its task scheduler. Once registered, the worker is ready to receive data and build instructions from the ATC and stream the artifacts back into the VPC where they are ultimately stored in Amazon S3 buckets.
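To make the registration step more concrete, here is a minimal sketch of the command line a worker might be started with. In our setup the registration is performed by Salt states rather than a hand-written command, so this is only an illustration. The flags are those of the `concourse worker` command as we understand it; all host names, paths, and the worker name are hypothetical.

```python
from typing import List


def concourse_worker_command(name: str, tsa_host: str, work_dir: str,
                             tsa_public_key: str, worker_private_key: str) -> List[str]:
    """Assemble the argument list for starting a Concourse worker.

    The worker connects to the Concourse web node (which hosts the ATC)
    and authenticates with its private key.
    """
    return [
        "concourse", "worker",
        "--name", name,
        "--work-dir", work_dir,
        "--tsa-host", tsa_host,
        "--tsa-public-key", tsa_public_key,
        "--tsa-worker-private-key", worker_private_key,
    ]


if __name__ == "__main__":
    # All values below are placeholders for illustration only.
    command = concourse_worker_command(
        name="mac-worker-01",
        tsa_host="concourse.example.internal:2222",
        work_dir="/opt/concourse/worker",
        tsa_public_key="/opt/concourse/keys/tsa_host_key.pub",
        worker_private_key="/opt/concourse/keys/worker_key",
    )
    print(" ".join(command))
```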

Creating new macOS VM templates

We streamline the creation of new Anka VMs with Veertu’s own builder extension for Packer by HashiCorp. A single Mac mini in our cluster is reserved for building new images. The creation of a new VM image starts with the execution of a script that handles the transfer of the necessary configuration files to the Mac builder via SSH. The same script then launches the Packer build process directly on the Mac builder. Packer derives each new VM image from a base VM that mimics a fresh install of macOS. The result is a new Anka VM image which is pushed to the Anka registry inside the VPC, thereby making it available to each Mac host within the cluster.

This method of creating Anka VMs follows the infrastructure as code paradigm because the provisioning of the VM is configured with files that are parsed and executed by Salt. Another advantage is that the configuration of the VMs is stored in version control and old VM images may be stored on the registry in case we need to revert any changes to our CI.
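The two steps performed by the image build script (copy the configuration to the builder, then start Packer there) could be sketched roughly as follows. The host name, directories, and template file name are hypothetical, and the real script may differ; the sketch only assembles the commands so that they can be inspected before being run.

```python
import shlex
from typing import List


def image_build_commands(builder: str, config_dir: str,
                         remote_dir: str, template: str) -> List[List[str]]:
    """Return the commands that copy the config to the builder and run Packer."""
    remote_command = f"cd {shlex.quote(remote_dir)} && packer build {shlex.quote(template)}"
    return [
        # Step 1: transfer the configuration files to the Mac builder over SSH.
        ["scp", "-r", config_dir, f"{builder}:{remote_dir}"],
        # Step 2: launch the Packer build directly on the Mac builder.
        ["ssh", builder, remote_command],
    ]


if __name__ == "__main__":
    # Placeholder values for illustration only.
    for command in image_build_commands(
        builder="ci@mac-builder.example.internal",
        config_dir="./anka-image",
        remote_dir="/tmp/anka-image",
        template="macos-worker.json",
    ):
        print(" ".join(shlex.quote(part) for part in command))
```

Each inner list can be handed to `subprocess.run(command, check=True)` to execute it, which stops the build as soon as one step fails.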

Dynamic provisioning of the worker VMs

We faced another engineering challenge when it came to provisioning: how should dynamic information be provided to the newly spawned VMs? Such information includes the Concourse worker name and the cryptographic keys that are necessary to register with the ATC. We especially did not want to bake the keys into the VM images as this would pose a security risk. Fortunately, the Anka Controller REST API allows us to pass a startup shell script to each VM. A condensed version of the startup script’s Jinja template follows.

    cat << EOF | sudo tee /srv/pillar/bootstrap.sls
    {{ anka.anka_vm_pillar }}
    EOF

    sudo INSIDE_ANKA_VM="TRUE" salt-call --local state.apply

Two important events occur in the lifetime of this template. The first event is the rendering of the template which occurs inside the VPC on the Anka controller instance. The cryptographic keys and ATC endpoint are dynamically pulled from a secure parameter store when the Anka controller is provisioned on AWS. Importantly, this data is interpolated directly into the script at the line {{ anka.anka_vm_pillar }}.

The second event occurs immediately after a new VM is spawned. The script is passed from the controller to the new VM and executed, which directly injects the dynamic data into the VM instance’s pillar. (Pillar is the term used by the Salt provisioner for a static data store.) The Salt states that register the worker with Concourse are then applied to the VM by setting the INSIDE_ANKA_VM environment variable to TRUE and running salt-call --local state.apply.

After a successful salt-call, the new Concourse Mac worker should be registered with the Concourse ATC and ready to work.
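The first of the two events, rendering the template on the controller, can be illustrated with a small stand-in renderer. Our real setup uses Jinja through Salt; the sketch below substitutes a few lines of standard-library Python that only understand `{{ dotted.name }}` placeholders, and the pillar keys and values shown are made up for the example.

```python
import re

# Condensed startup script template, as shown above.
TEMPLATE = """\
cat << EOF | sudo tee /srv/pillar/bootstrap.sls
{{ anka.anka_vm_pillar }}
EOF

sudo INSIDE_ANKA_VM="TRUE" salt-call --local state.apply
"""

# Matches placeholders such as "{{ anka.anka_vm_pillar }}".
PLACEHOLDER = re.compile(r"\{\{\s*([\w.]+)\s*\}\}")


def lookup(context: dict, dotted_name: str):
    """Resolve a dotted name like "anka.anka_vm_pillar" in nested dicts."""
    value = context
    for part in dotted_name.split("."):
        value = value[part]
    return value


def render(template: str, context: dict) -> str:
    """Replace every placeholder with its value from the context."""
    return PLACEHOLDER.sub(lambda match: str(lookup(context, match.group(1))), template)


if __name__ == "__main__":
    # Hypothetical pillar data; in practice it comes from a secure parameter store.
    context = {
        "anka": {
            "anka_vm_pillar": "concourse:\n  worker_name: mac-worker-01\n  atc_endpoint: concourse.example.internal",
        }
    }
    print(render(TEMPLATE, context))
```

Because the secrets only enter the script at render time, the VM images stored in the registry never contain them.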

Current limitations

There are currently two limitations to the setup described above.

- On Linux, where each Concourse task runs in a container, data is streamed between containers within the VPC. In the case of Anka and the Mac, the workers need to receive data from and send data to the VPC. This can cause latency and bandwidth issues due to the Mac minis’ physical separation from the AWS hardware. We were able to work around this limitation by shaping the traffic around our Mac cluster in our office’s router. We also expect changes in streaming behavior in upcoming Concourse releases to further improve streaming performance.

- Concourse currently does not support on-demand VMs for the Mac. To prevent drift of the build environment, a cron job on the Anka controller respawns the VMs once per day. We found that this frequency was enough to keep our build environment fresh.
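The daily respawn mentioned in the second limitation could be structured along the following lines. This is a minimal sketch, not our actual job: the three callables stand in for whatever talks to the Anka controller, and the cron schedule and script path in the comment are hypothetical.

```python
from typing import Callable, Iterable, List

# Hypothetical crontab entry on the controller, running the job once per day:
#   0 3 * * * /usr/bin/python3 /opt/ci/respawn_workers.py


def respawn_workers(list_instances: Callable[[], Iterable[str]],
                    terminate: Callable[[str], None],
                    spawn: Callable[[], str]) -> List[str]:
    """Replace every running worker VM with a freshly spawned one.

    Returns the identifiers of the new instances.
    """
    old_instances = list(list_instances())
    new_instances = []
    for instance_id in old_instances:
        terminate(instance_id)          # destroy the drifted VM
        new_instances.append(spawn())   # start a clean VM from the image
    return new_instances


if __name__ == "__main__":
    # In-memory fakes so the sketch runs without a controller.
    running = ["vm-a", "vm-b"]
    counter = iter(range(1, 100))

    def fake_spawn() -> str:
        instance_id = f"vm-new-{next(counter)}"
        running.append(instance_id)
        return instance_id

    print(respawn_workers(lambda: list(running), running.remove, fake_spawn))
    print(running)
```

A real job would also need to decide what to do with workers that are in the middle of a build, which this sketch does not address.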

Summary

In summary, we use Veertu, Inc.’s Anka virtualization technology to achieve well-isolated build environments for macOS builds. The unique circumstances regarding Apple license agreements meant that we needed to design a custom solution that integrated the Macs into our current CI system with Concourse on AWS. Despite this challenge, we were able to launch Concourse Mac workers inside Anka VMs that are running on an on-site cluster of Mac minis. The VMs are connected to the Concourse ATC inside our private cloud using credentials that are dynamically provisioned by the Anka controller.

Overall, Anka has provided us symmetry with our Linux builds in Concourse.