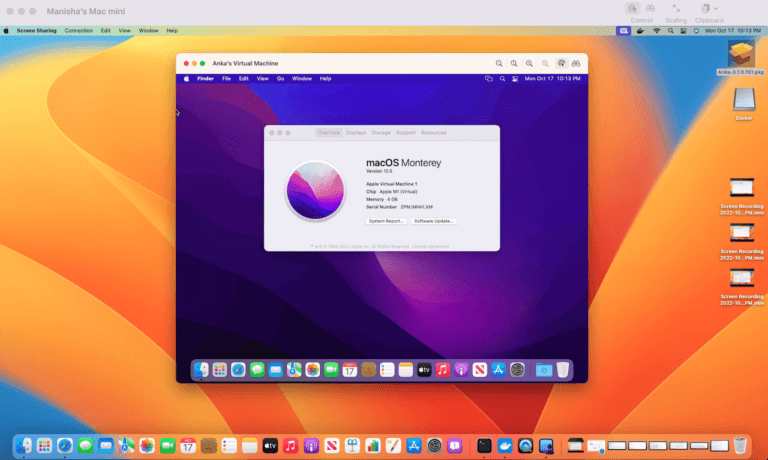

Starting in Anka 3.1 we announced that Anka is now able to fully automate the macOS installation processes, disabling SIP, and enabling VNC — all previously manual steps users had to perform inside o the VM. At the time of writing this article,...

Starting in Anka 3.2, we’ve introduced a solution for scripting macOS UI user actions. You may ask, “Why would I want to do that?”. Well, often macOS configuration and applications do not have a CLI allowing you to perform certain actions...

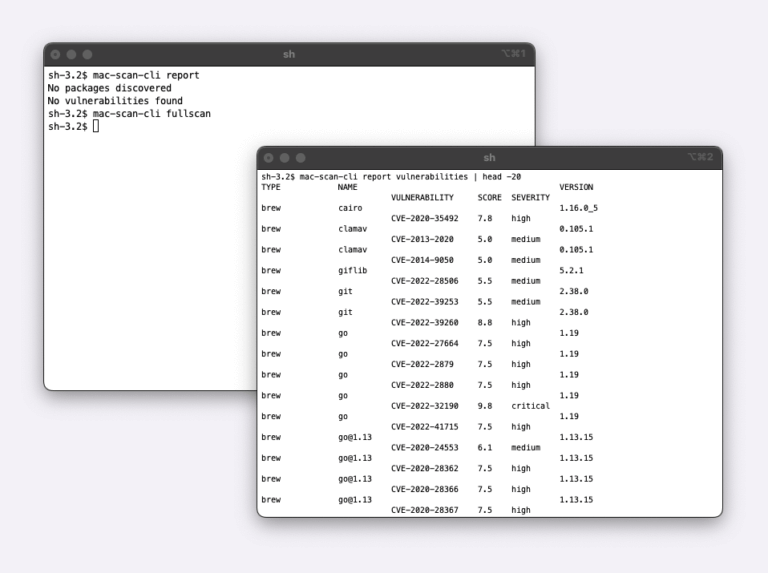

Today, we are announcing the Beta availability of the Mac Scan solution. Mac Scan software runs on macOS systems (bare metal, virtual, EC2 Mac) and scans downloads in real time for security vulnerabilities. There are multiple scenarios why you would want...

We are very happy to announce the General Availability of Anka 3.1 for Apple Silicon / ARM macs. In this release, we are taking our approach to iOS CI automation one step further by introducing a Behavior-Driven macOS UI Automation Framework in Anka,...

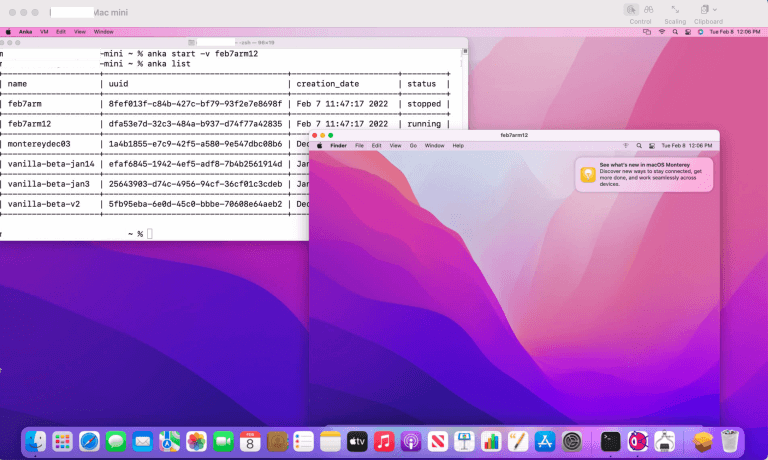

In this blog, we will cover the key topics for migrating from Anka on Intel to Anka on M1/M2 Macs. Anka is an IaaC solution from Veertu to set up an agile Container like CI for iOS CI using macOS VMs. Anka for Intel uses Apple’s Hypervisor.Framework virtualization...

We are excited to announce the General Availability of the world’s first security vulnerability scanner for EC2 Mac AMIs. EC2 Mac AMI Scan scans Intel and Apple Silicon macOS EC2 AMIs, detects security vulnerabilities in third-party packages, dependencies,...

It's time to migrate your iOS CI from ESXi Virtual Mac Infrastructure to native macOS Virtualization

When VMWare ESXi started officially supporting Apple macOS Virtualization on Mac hardware in late 2012, it opened the doors for the possibility of iOS development to move to a Linux-like, agile, scalable CI infrastructure. Soon enough, many iOS enterprise...

We are excited to announce the general availability (GA) of Anka Scan v1.0.0. As development teams increasingly adopt Infrastructure-as-code for development and production, the incident with Log4J in December 2021 highlighted the importance of security...

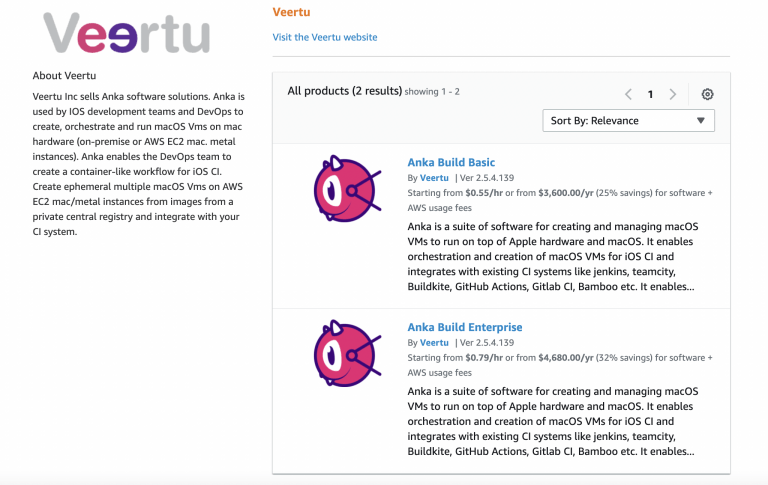

We are announcing the availability of Anka software with hourly usage pricing for AWS EC2 Mac deployments to align with currently available ec2-mac instance usage models. Why do users need to run Anka on top of ec2-mac instances? AWS ec2-mac instance...

No posts found